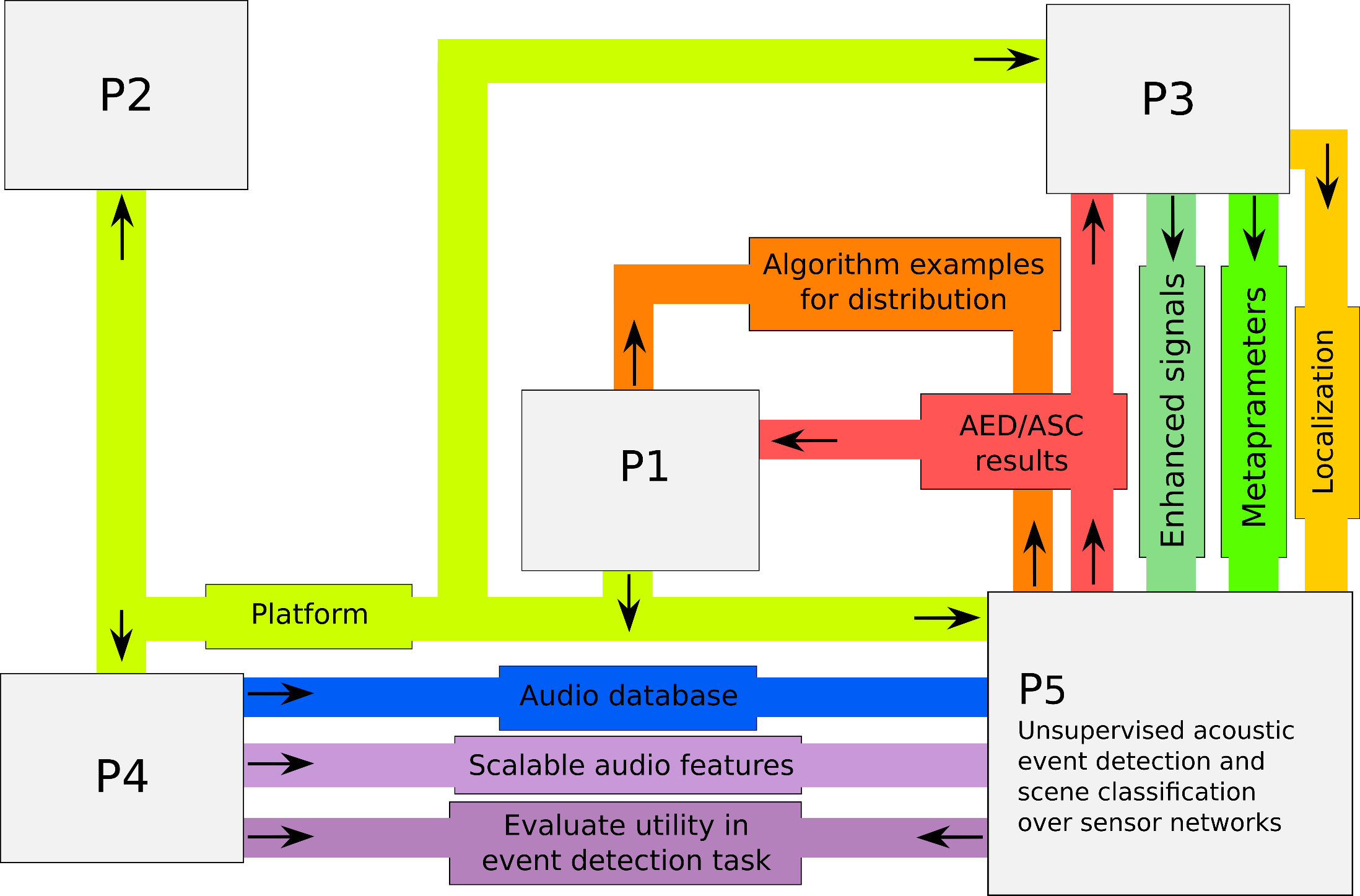

Unsupervised Acoustic Event Detection and Scene Classification over Sensor Networks

In many applications of acoustic sensor networks, acoustic signal extraction and enhancement are not the end goal but only a necessary intermediate step toward classifying and interpreting the audio signals. In this project we will develop acoustic event detection and acoustic scene classification methods for interpreting the signals gathered by an acoustic sensor network. We focus on unsupervised learning methods, which make the system more versatile and cost-effective than an approach that relies on carefully annotated training data.

Automatic acoustic event detection (AED) and acoustic scene classification (ASC) are two tasks under the umbrella of Computational Auditory Scene Analysis. AED assigns to a temporal region of audio the label that people would use to describe the sound event (e.g., door knocks, footsteps). ASC associates an audio stream with a semantic label that identifies the environment in which it was produced (e.g., busy street, restaurant, supermarket).
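The two tasks differ mainly in the granularity of their output. The minimal sketch below makes this concrete, assuming a Python representation chosen purely for illustration: the classes `SoundEvent` and `SceneLabel` and the example values are hypothetical. AED yields a list of time-stamped labels, while ASC yields one label for the whole recording.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SoundEvent:
    """Hypothetical AED result: a labelled temporal region (times in seconds)."""
    onset: float
    offset: float
    label: str


@dataclass
class SceneLabel:
    """Hypothetical ASC result: a single label for the entire audio stream."""
    label: str


# Illustrative outputs for the same recording (values are made up).
aed_output: List[SoundEvent] = [
    SoundEvent(onset=1.2, offset=1.9, label="door knock"),
    SoundEvent(onset=4.0, offset=7.5, label="footsteps"),
]
asc_output = SceneLabel(label="restaurant")

for ev in aed_output:
    print(f"{ev.onset:.1f}-{ev.offset:.1f} s: {ev.label}")
print("scene:", asc_output.label)
```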

These technologies can be used in a variety of applications. Analyzing the audio track of multimedia documents enables automatic segmentation, annotation and indexing in support of search, retrieval or summarization tasks. In monitoring and surveillance applications, such as biosphere, crowd or traffic monitoring, tools for the semantic analysis of audio can significantly reduce the manual workload of, e.g., security personnel. The classification of acoustic events has also been investigated for unobtrusive monitoring in health care, urban planning, and ambient assisted living. AED/ASC can further improve the interaction capabilities of service robots by increasing their context awareness. Finally, acoustic scene classification is used in hearing aids to adapt the device's parameters to the characteristics of the acoustic scene.

Research activity in the semantic analysis of audio has increased recently. This can be attributed in part to the fact that huge amounts of audio and multimedia data can easily be recorded and are available on the internet. Several challenges conducted in this field testify to the growing interest. One of the first was the CLEAR evaluation, carried out in the context of the CHIL project.

The TRECVID series of video retrieval evaluations focuses on audiovisual, multimodal event detection in video recordings. There are also challenges on bioacoustic signals, such as bird song classification, unsupervised learning from bioacoustic data (bird and whale songs; see, e.g., the workshop at ICML 2014), or automatic classification of animal sounds (SABIOD machine listening challenge).

The IEEE AASP-supported challenge on the detection and classification of acoustic scenes and events (DCASE) provided a good overview of the state of research in AED and ASC as of 2013. It comprised subtasks on ASC and on AED with isolated and with overlapping sound events. Following its success, a second DCASE challenge is planned for 2016.

Most of the challenges and collaborative research efforts cited above constrain the problem in one way or another. Almost all of them consider a supervised scenario, in which labelled training data is available for each of the sound classes to be recognized. The data had been (manually) segmented into short utterances, each containing a single scene or an isolated sound, and sometimes sound sequences or overlapping sounds. Finally, the number of sound event classes remained quite limited (on the order of a few tens) and was known a priori. While such constraints are legitimate, or even necessary, for arriving at first commercially viable solutions, they only touch on the more fundamental issues:

- Variety of sounds and applications: General audio covers a much broader range of signals than speech, and even a single category (e.g., dog barking) shows large variability.

- Data deluge: In (always-on) surveillance systems, a huge amount of unsegmented data must be processed in real time.

- Polyphony: Natural acoustic scenes are often characterized by several simultaneously active sound sources.

- Labeling problem: Only a few labelled databases exist, and many potential applications lack appropriate labelled training data. Even for the existing databases, there is no agreed-upon annotation scheme: labels are often ambiguous, their degree of abstraction and temporal resolution can differ considerably, and the temporal alignment of the labels with the data may be poor.

Some, if not all, of these issues differ considerably from the situation in automatic speech recognition (ASR). The tools developed in the mature field of ASR are therefore a good starting point, but no more than that.

In this project we aim to tackle some of these fundamental challenges. Our working hypothesis is that unsupervised and semi-supervised learning techniques are a promising approach: they avoid or reduce the cost of collecting and annotating training data, and they promise to cope with the large variety of sounds and applications by learning relevant features from the data and revealing the structure hidden in it.
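As a generic illustration of revealing structure in unlabelled data, the sketch below groups per-frame feature vectors with plain k-means. This is a textbook example of unsupervised learning, not the project's own method; the farthest-first initialisation, the function names `kmeans` and `assign_frames`, and the synthetic two-group features (standing in for, e.g., log-mel frames of two sound types) are all assumptions made for the example.

```python
import numpy as np


def assign_frames(features: np.ndarray, centroids: np.ndarray) -> np.ndarray:
    """Return the index of the nearest centroid (squared Euclidean) for each frame."""
    dists = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return dists.argmin(axis=1)


def kmeans(features: np.ndarray, k: int, n_iter: int = 50, seed: int = 0):
    """Plain k-means over frame features (one row per frame), no labels needed."""
    rng = np.random.default_rng(seed)
    # Farthest-first initialisation: start from a random frame, then repeatedly
    # add the frame farthest from all centroids chosen so far.
    centroids = [features[rng.integers(len(features))]]
    for _ in range(1, k):
        d = np.min([((features - c) ** 2).sum(axis=1) for c in centroids], axis=0)
        centroids.append(features[d.argmax()])
    centroids = np.array(centroids, dtype=float)

    for _ in range(n_iter):
        assign = assign_frames(features, centroids)
        # Move each centroid to the mean of its frames (keep it if the cluster is empty).
        new = np.array([
            features[assign == j].mean(axis=0) if np.any(assign == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new, centroids):
            break
        centroids = new
    return assign_frames(features, centroids), centroids


# Synthetic stand-in for frame features: two well-separated "sound types".
rng = np.random.default_rng(1)
frames = np.vstack([rng.normal(0.0, 0.1, (100, 4)), rng.normal(3.0, 0.1, (100, 4))])
labels, centers = kmeans(frames, k=2)
print(np.bincount(labels))
```

With such well-separated synthetic groups, each group ends up in its own cluster; on real audio the clusters would only be candidate sound classes that still need interpretation.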
